Streamlining Network Design with GPU-Accelerated Predictions


Designing complex network infrastructures can be a time-consuming and intricate process, especially when optimizing for better efficiency and performance. Networks with multiple wireless services that span large areas such as stadiums or airports often pose significant challenges in terms of optimization and accuracy. Traditional methods relying solely on CPU calculations can struggle to handle the complexity efficiently.

The Challenge of Complex Network Design

Complex network designs require precise predictions and optimizations to ensure reliable wireless coverage and performance. However, traditional CPU-based calculations can become overwhelmed by the sheer volume of computations needed for large-scale projects.

Factors contributing to the complexity of network design include:

- Diverse Environments: Networks often cover diverse physical environments with varying characteristics, such as indoor areas, outdoor spaces, open areas, and densely populated zones. Each environment presents unique challenges for signal propagation and coverage planning.

- Multiple Wireless Services: Networks must support multiple wireless services concurrently, such as Wi-Fi, cellular, public safety communications, and IoT devices. Each service has specific requirements and performance criteria that must be met, adding complexity to network optimization.

- Optimal Antenna Placement: Determining the optimal locations for antennas to ensure adequate coverage and minimal interference is crucial yet challenging. Antenna placement significantly impacts signal strength, coverage area, and overall network performance.

- Signal Propagation Prediction: Predicting how signals will propagate within different environments, considering factors like building materials, terrain, and interference sources, is essential for achieving reliable coverage and performance.

- Capacity Planning: Designing networks to handle anticipated user loads and data traffic requires careful capacity planning. Balancing capacity with coverage and signal quality is critical to avoid network congestion and ensure seamless connectivity.
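To make the signal propagation factor above more concrete, here is a minimal sketch of a textbook log-distance path loss calculation. It is not iBwave's prediction engine, which the article does not describe; the function names, transmit power, frequency, and path loss exponents are all illustrative assumptions. The point is only that the environment (captured here by a single exponent) changes the predicted signal level at the same distance.

```python
import math


def free_space_path_loss_db(distance_m: float, freq_mhz: float) -> float:
    """Free-space path loss in dB (distance in metres, frequency in MHz)."""
    return 20 * math.log10(distance_m) + 20 * math.log10(freq_mhz) - 27.55


def log_distance_path_loss_db(distance_m: float, freq_mhz: float,
                              exponent: float, ref_distance_m: float = 1.0) -> float:
    """Log-distance model: free-space loss at the reference distance,
    plus 10 * n * log10(d / d0) beyond it."""
    # Clamp so the model is never evaluated closer than the reference distance.
    d = max(distance_m, ref_distance_m)
    reference_loss = free_space_path_loss_db(ref_distance_m, freq_mhz)
    return reference_loss + 10 * exponent * math.log10(d / ref_distance_m)


def received_power_dbm(tx_power_dbm: float, distance_m: float,
                       freq_mhz: float, exponent: float) -> float:
    """Received power, ignoring antenna gains and cable losses (assumption)."""
    return tx_power_dbm - log_distance_path_loss_db(distance_m, freq_mhz, exponent)


# Hypothetical scenario: 20 dBm transmitter at 2400 MHz, receiver 50 m away.
# The exponents are illustrative, not measured values.
for label, exponent in [("open area", 2.0), ("dense indoor area", 3.5)]:
    rx = received_power_dbm(20.0, 50.0, 2400.0, exponent)
    print(f"{label}: {rx:.1f} dBm at 50 m")
```

A real prediction repeats a far more detailed version of this calculation for every antenna, every wireless service, and every point in the venue, which is where the computational load comes from.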

Leveraging GPU Acceleration for Efficient Design

To address these challenges, integrating GPU (Graphics Processing Unit) acceleration, using NVIDIA’s CUDA® cores, into network design workflows emerges as a transformative solution. GPUs are highly efficient at handling parallel processing tasks, making them ideal for the complex mathematical computations involved in network prediction and optimization. By offloading these computations to GPUs, the overall design process can be accelerated significantly.

This acceleration is especially apparent in demanding projects like stadiums, airports, and large buildings, which traditionally require extensive time for design and network coverage prediction. With GPU-accelerated predictions, the time required to accurately predict network configurations can be reduced substantially, potentially saving tens of hours in complex scenarios.

Understanding GPU Acceleration Mechanics

GPU acceleration harnesses the parallel processing capabilities of graphics cards to execute computations rapidly and efficiently. Unlike traditional CPUs optimized for sequential processing, GPUs are designed with thousands of cores that can perform multiple calculations simultaneously. This massively parallel architecture enables GPUs to tackle complex mathematical operations inherent in network prediction algorithms.

The fundamental principles of GPU acceleration include:

- Parallelism: GPUs excel at parallel processing, dividing tasks into smaller sub-tasks that can be executed concurrently across multiple cores. This allows for high throughput and significant speed improvements compared to CPUs.

- Data-Parallel Computing: GPU algorithms are optimized for data-parallel operations, where the same operation is performed simultaneously on different data elements. This approach is well-suited for tasks like matrix multiplications and signal processing in network predictions.

- Memory Bandwidth: GPUs have high memory bandwidth, enabling rapid access to data required for computations. This capability is crucial for handling large datasets efficiently during network design simulations.
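The parallelism and data-parallel principles above can be sketched in plain Python. This is a CPU stand-in, not GPU code and not iBwave's implementation: the per-point formula, the grid size, and the function names are hypothetical. It illustrates the property a GPU exploits, namely that the same operation is applied to every grid point and no point depends on another, so the work can be split into chunks and processed in any order with identical results.

```python
import math
from concurrent.futures import ThreadPoolExecutor


def path_loss_db(distance_m: float, exponent: float = 3.0) -> float:
    """Hypothetical per-point operation: log-distance loss relative to 1 m."""
    return 10 * exponent * math.log10(max(distance_m, 1.0))


def predict_points(points, antenna):
    """Apply the same operation to every point; no point depends on another."""
    ax, ay = antenna
    return [path_loss_db(math.hypot(x - ax, y - ay)) for x, y in points]


def predict_in_chunks(points, antenna, workers):
    """Split the points into slices, process each slice independently,
    and concatenate the partial results in their original order."""
    size = max(1, math.ceil(len(points) / workers))
    chunks = [points[i:i + size] for i in range(0, len(points), size)]
    results = []
    # Threads only stand in for GPU cores here; they show the structure,
    # not the speedup.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        for part in pool.map(lambda chunk: predict_points(chunk, antenna), chunks):
            results.extend(part)
    return results


# Hypothetical 30 x 20 grid of prediction points with one antenna.
grid = [(float(x), float(y)) for y in range(20) for x in range(30)]
antenna = (15.0, 10.0)

sequential = predict_points(grid, antenna)
chunked = predict_in_chunks(grid, antenna, workers=8)
print(sequential == chunked)
```

Because the chunked and sequential results match, the number of workers is purely a performance decision. On a GPU the same idea is taken to the extreme, with each thread typically handling a single data element.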

Benefits of GPU Predictions

- Speed: GPUs excel at parallel processing of mathematical operations, allowing for significantly faster computations compared to CPUs. This speed improvement translates to quicker predictions and optimizations, reducing design iteration times.

- Scalability: GPU acceleration scales well with the complexity of network designs. As projects grow larger and more intricate, GPUs maintain performance levels, ensuring efficient processing even for demanding scenarios.

- Accuracy: By processing predictions rapidly, GPU acceleration enables users to evaluate multiple design scenarios efficiently. This iterative approach leads to more accurate optimizations and improved overall network performance.

- Efficiency: Leveraging GPUs for network predictions frees up CPU resources, allowing for smoother multitasking and enhanced system responsiveness during the design process.

iBwave’s Innovations in Network Design

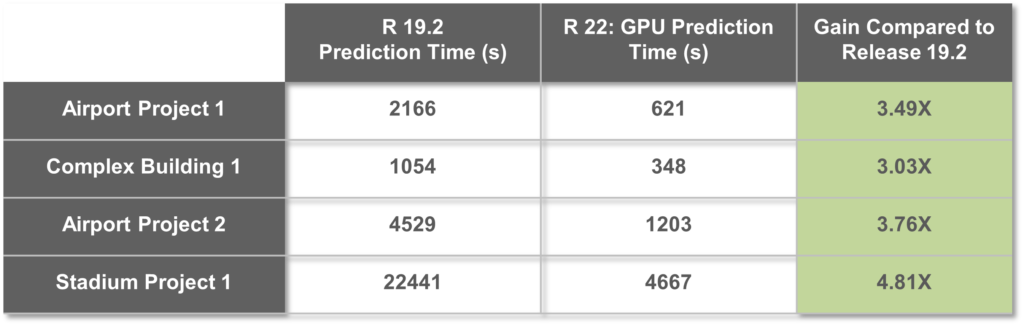

At iBwave, we have harnessed the power of GPU acceleration to revolutionize network design capabilities. Our platform leverages GPU-based prediction algorithms to achieve remarkable performance improvements. Predictions can now be completed up to 5 times faster than with traditional CPU methods, enabling rapid optimizations and superior network planning. On very complex projects with multiple wireless services, this can save users tens of hours.

With this enhanced speed, users have more time to dedicate to fine-tuning and optimizing their network designs. The ability to iterate quickly and efficiently results in better-performing wireless networks tailored to specific environments like stadiums, airports, and large buildings.
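To put the "up to 5 times faster" figure in perspective, the following sketch converts a speedup factor into hours saved. The 25-hour baseline is a hypothetical number chosen for illustration, not a measurement from any iBwave project, and the function names are likewise assumptions.

```python
def accelerated_hours(baseline_hours: float, speedup: float) -> float:
    """Run time after acceleration, given a speedup factor (e.g. 5.0 for 5x)."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return baseline_hours / speedup


def hours_saved(baseline_hours: float, speedup: float) -> float:
    """Time saved relative to the unaccelerated baseline."""
    return baseline_hours - accelerated_hours(baseline_hours, speedup)


# Hypothetical baseline: a prediction that takes 25 hours on the CPU.
baseline = 25.0
for speedup in (2.0, 5.0):
    print(f"{speedup:.0f}x faster: {accelerated_hours(baseline, speedup):.1f} h "
          f"instead of {baseline:.1f} h, saving {hours_saved(baseline, speedup):.1f} h")
```

The saving grows with the baseline, which is why the largest projects benefit most: the same speedup factor applied to a longer prediction frees up more hours.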

In the table below, you can see the prediction speed improvements across several complex projects with a single wireless service compared to iBwave Release 19.2. The time savings tend to scale with the project’s complexity and the number of wireless services.

Empowering Efficient Network Design

GPU acceleration represents a pivotal advancement in streamlining complex network designs. By harnessing the computational power of GPUs, we empower users with unprecedented efficiency and accuracy in wireless network planning. This technology revolutionizes the design process, enabling faster predictions and optimizations, ultimately leading to superior network performance.

Discover how this cutting-edge technology is transforming the way networks are planned and optimized for performance and reliability. Embrace the future of network design with iBwave and experience the power of GPU-accelerated predictions.

To learn more about how iBwave leverages GPU acceleration to streamline network design processes and achieve remarkable performance improvements, visit our website.

Check out our blog for more tips and topics on wireless networks and their planning!

- Transforming Wireless Network Management with Digital Twins - April 2, 2025

- Top 3 Trends Shaping Network Design in 2025 - January 17, 2025

- Streamlining Network Design with GPU-Accelerated Predictions - May 21, 2024